By Jeffrey Mendelsohn

In theory, there are methods that can be added to 'std::shared_mutex' to improve the lock’s performance in common usage scenarios. For instance, the process of obtaining an exclusive lock can be split into two parts:

1) obtaining a shared lock immediately, with the added guarantee of being the only possible exclusive owner, and 2) waiting for all other shared lock owners to release their locks.

In other words, 'reserve' the write lock and 'upgrade' the reservation to the write lock. This separation allows the reserving thread to do read operations while waiting for other readers to release their shared locks. Conversely, there are usage patterns where atomically releasing the write lock and obtaining a read lock, to allow other readers to share the lock, would be valuable.
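The reserve, upgrade, and downgrade operations described above can be sketched as follows. This is a minimal illustration built on a plain mutex and condition variable, not the implementation presented in the talk and not part of 'std::shared_mutex'. The class name `ReservableSharedMutex` and the method names `lock_reserve`, `upgrade`, and `downgrade` are assumptions made for this example.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>

// Hypothetical type: every name here is illustrative and is neither part of
// std::shared_mutex nor the implementation presented in the talk.
class ReservableSharedMutex {
    std::mutex m_;
    std::condition_variable cv_;
    int readers_ = 0;        // current shared owners, including a reserver
    bool reserved_ = false;  // some thread holds the write reservation
    bool writer_ = false;    // a writer owns the lock or is draining readers

public:
    void lock_shared() {
        std::unique_lock<std::mutex> lk(m_);
        cv_.wait(lk, [this] { return !writer_; });
        ++readers_;
    }
    bool try_lock_shared() {
        std::lock_guard<std::mutex> lk(m_);
        if (writer_) return false;
        ++readers_;
        return true;
    }
    void unlock_shared() {
        std::lock_guard<std::mutex> lk(m_);
        --readers_;
        cv_.notify_all();
    }
    // Part 1: take a shared lock and become the only possible exclusive owner.
    void lock_reserve() {
        std::unique_lock<std::mutex> lk(m_);
        cv_.wait(lk, [this] { return !writer_ && !reserved_; });
        reserved_ = true;
        ++readers_;
    }
    // Part 2: stop admitting readers, then wait for the other readers to leave.
    void upgrade() {
        std::unique_lock<std::mutex> lk(m_);
        writer_ = true;
        cv_.wait(lk, [this] { return readers_ == 1; });
        readers_ = 0;
        reserved_ = false;
    }
    // Atomically trade the exclusive lock for a shared lock.
    void downgrade() {
        std::lock_guard<std::mutex> lk(m_);
        writer_ = false;
        readers_ = 1;
        cv_.notify_all();
    }
    // Ordinary exclusive lock: must also wait for any outstanding reservation.
    void lock() {
        std::unique_lock<std::mutex> lk(m_);
        cv_.wait(lk, [this] { return !writer_ && !reserved_; });
        writer_ = true;
        cv_.wait(lk, [this] { return readers_ == 0; });
    }
    void unlock() {
        std::lock_guard<std::mutex> lk(m_);
        writer_ = false;
        cv_.notify_all();
    }
};

// Single-threaded walk-through; returns the final value of the guarded data.
int reserve_upgrade_downgrade_demo() {
    ReservableSharedMutex mx;
    int value = 41;

    mx.lock_reserve();                      // shared access plus reservation
    bool admitted = mx.try_lock_shared();   // other readers still get in
    int next = value + 1;                   // read while reserved
    if (admitted) mx.unlock_shared();
    assert(admitted);

    mx.upgrade();                           // now exclusive
    bool excluded = !mx.try_lock_shared();  // readers are kept out
    assert(excluded);
    value = next;

    mx.downgrade();                         // back to shared with no gap
    int result = value;
    mx.unlock_shared();
    return result;
}
```

In this sketch, `upgrade` stops admitting new readers before it waits, so a steady stream of readers cannot starve the reserving thread. That is one possible design choice. A competitive implementation would likely replace the mutex and condition variable with atomic operations.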

Is complicating the 'std::shared_mutex' interface with these items worthwhile? How much of a performance increase is reasonably expected in the scenarios where these methods are desired?

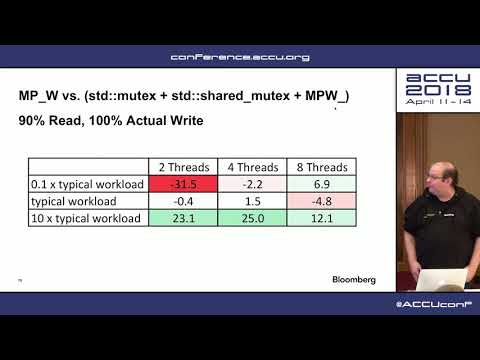

This talk starts with a review of the C++17 specification for 'std::shared_mutex'. Then, a simple, competitive implementation of 'std::shared_mutex' that supports these methods is presented. Finally, benchmark results are analyzed over a three-dimensional domain: number of concurrent lock accesses, percentage of read accesses, and duration of lock hold time.

Due to time constraints, all presented benchmark results are from a Linux system.